For the past year and a half, I’ve been working with an unofficial team at Microsoft to develop audio-based games: games that can be played using sound alone. Now I’m happy to announce that Ear Hockey, the game I’ve personally been working on, has been published to the Microsoft Store for PC.

This post is about the process of creating the game. For detailed information about the game itself (and the download link), see our project profile on the Garage website.

Project background

I joined this team at a company-wide hackathon event in the summer of 2017 after reading a bit about their project online. The team had previously done a few hardware-based accessibility projects and was now turning to software. Accessibility, in the field of technology, is the quality of being usable by many different people, especially people with disabilities.

The team’s goal for this event was to experiment with features in video game environments that might be useful to people who are blind or low-vision; in other words, to learn how to convey game information through audio alone rather than visuals. The team called themselves Audio Augmented Reality Gaming because they were making games that used the 3D spatial audio technology found in Microsoft’s augmented reality headset, the HoloLens (though development soon shifted from HoloLens to PC).

I took an interest in this project because it was an opportunity to create completely new experiences. To play and win the games, sighted players would need to hone their auditory perception, a skill we rarely focus on. And blind players would be able to enjoy fun new experiences through a medium in which they’re already comfortable (the number of audio-based games on the market is currently very small).

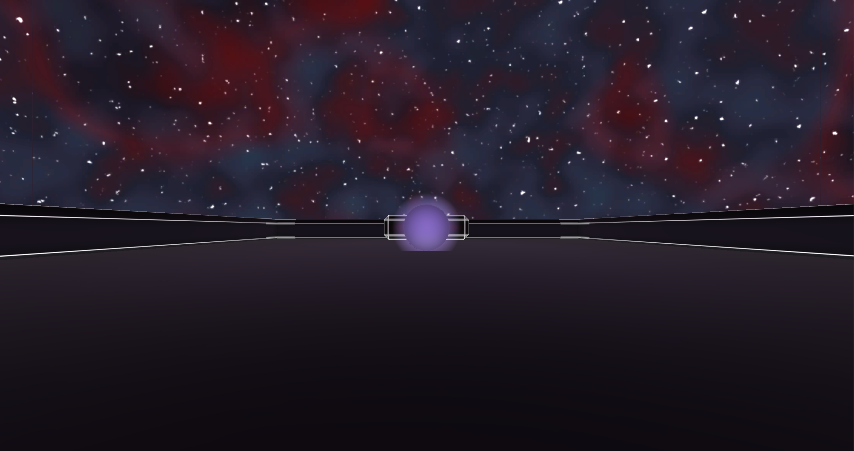

We were a group of about eight, and we split into sub-groups that each worked on a different mini-game. I joined “audio pong,” a prototype that one of the teammates, Boris, had put together a few days earlier. It was a Pong-like game, with two paddles hitting a ball back and forth. The ball emitted a constant hum that was positioned in 3D space using the game’s spatial sound technology, so in theory a player could locate it just by listening. During the hackathon week, I added new features and improved the game’s mechanics.
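Roughly speaking, a positional sound lets a listener locate its source because what reaches each ear changes with the source’s direction and distance. As a simplified illustration only, the Python sketch below approximates that idea with constant-power stereo panning plus inverse-distance attenuation. It is not how the game itself works: the game relied on Unity’s 3D spatial sound, which models much more than left/right volume differences. The function name `spatialize`, its coordinate convention (`x` sideways, `z` straight ahead), and the `ref_distance` parameter are all hypothetical.

```python
import math


def spatialize(x: float, z: float, ref_distance: float = 1.0) -> tuple[float, float]:
    """Hypothetical sketch: (left_gain, right_gain) for a sound at (x, z).

    x: sideways offset from the listener (negative = left, positive = right)
    z: offset straight ahead (negative = behind)
    ref_distance: distance (> 0) inside which no attenuation is applied
    """
    # Azimuth in [-pi/2, pi/2]: 0 = centered, +pi/2 = hard right, -pi/2 = hard left.
    # Plain stereo cannot tell front from back, so sources behind the listener
    # are mirrored to the front by using abs(z).
    azimuth = math.atan2(x, abs(z))

    # Map the azimuth onto [0, pi/2]; using cos/sin for the two gains keeps
    # left^2 + right^2 constant, so loudness doesn't dip as the ball crosses the center.
    theta = (azimuth + math.pi / 2) / 2

    # Simple inverse-distance rolloff, capped at full volume near the listener.
    distance = math.hypot(x, z)
    attenuation = 1.0 if distance <= ref_distance else ref_distance / distance

    return attenuation * math.cos(theta), attenuation * math.sin(theta)


if __name__ == "__main__":
    for pos in [(0.0, 2.0), (1.0, 0.0), (-3.0, 0.0)]:
        left, right = spatialize(*pos)
        print(f"ball at {pos}: left={left:.3f}, right={right:.3f}")
```

A ball straight ahead comes out equally loud in both ears, a ball to the right comes out mostly in the right ear, and a farther ball is quieter overall. Because this stereo-only sketch folds front and back together, real spatializers add head-related (HRTF) cues to help resolve that ambiguity.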

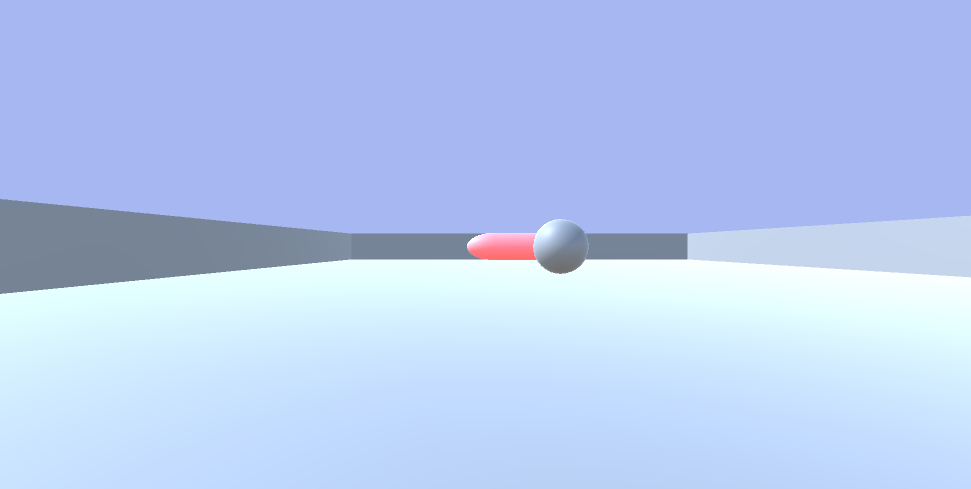

“audio pong” – a very bare prototype of our game. The camera looks out from one paddle, and the red shape is the opponent’s paddle.

In the end, we developed a total of six modest game prototypes. They were popular at the project expo at the end of the week, and the event sponsors presented us with the Ability Hack award for favorite accessibility-related project.

Along with the award came a lot of positive attention from Microsoft’s accessibility organization. They were excited about the games and wanted to do whatever they could to help us develop them to completion. We decided to continue developing two of our prototypes: “audio pong” and “echo nav,” a room-escape game that used the revolutionary feature of virtual echolocation (I hope to tell you more about it someday soon). Of course, after the hackathon event, further participation was completely optional. A few team members declined to continue, a few transferred out of Microsoft, and in the end we were five: Boris and I would work on “audio pong,” two others on “echo nav,” and one would act as manager, coordinator, spokesman, and point-of-contact for our collaborators and sponsors.

The next year and a half saw us presenting to Microsoft’s Chief Accessibility Officer Jenny Lay-Flurrie, playtesting our games with blind employees and leaders within the company, travelling to Los Angeles for a consultation with master echolocator Daniel Kish, securing funding for our project expenses, and escaping to sunny San Diego to demo our games at the CSUN Assistive Technology Conference.

Of course, it also made for many frustrating weekends as we clumsily learned professional game development, an art that was brand new to us.

Game development

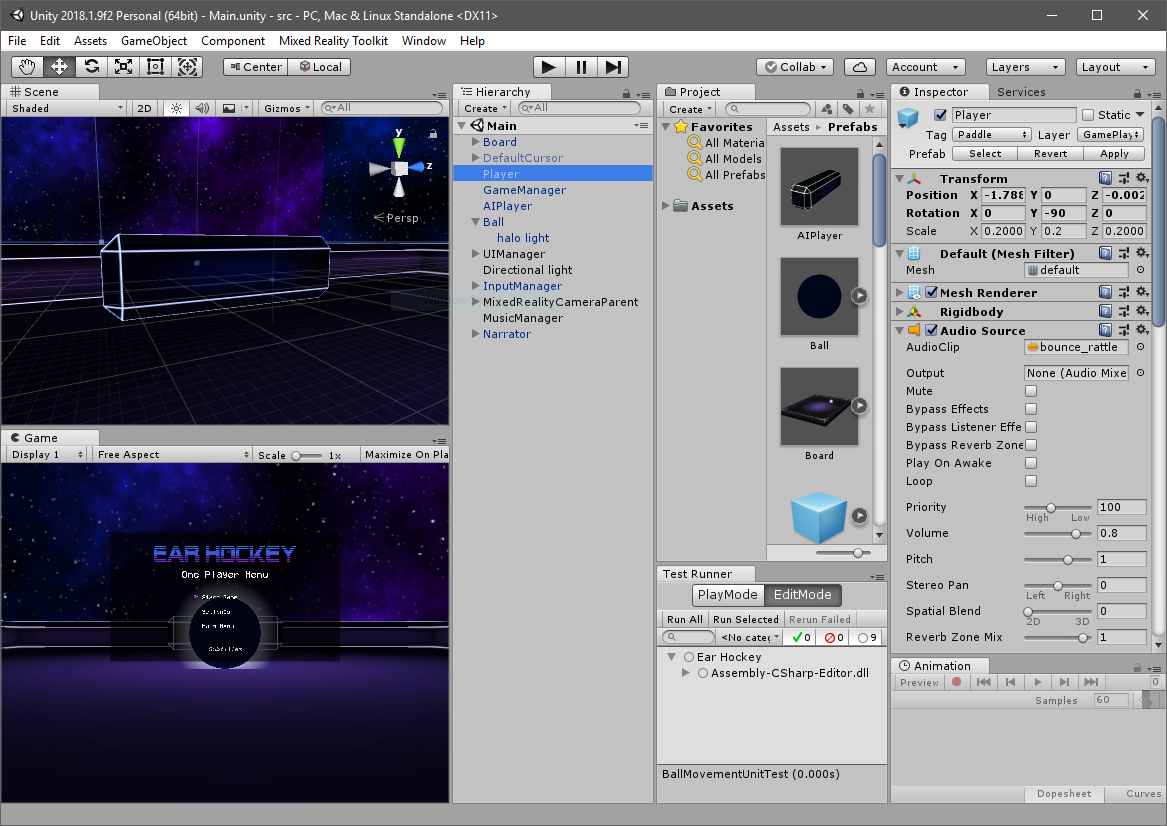

At the time, I had picked up some very basic game dev skills but had certainly never made a full game. We developed this game in Unity, a free game engine with a large community and lots of online tutorials. Working in Unity involves two parts: the editor, where you import game objects and adjust their settings through the UI, and C# scripts, which define the objects’ behavior and settings in code. The scripts are where Boris and I did the bulk of our work.

I learned more about Unity than I ever thought I would need to know, and I definitely picked up some programming chops from Boris, who had more dev experience than I did. I was very glad for that, and my next game project should be a bit easier to get started on.

Audio

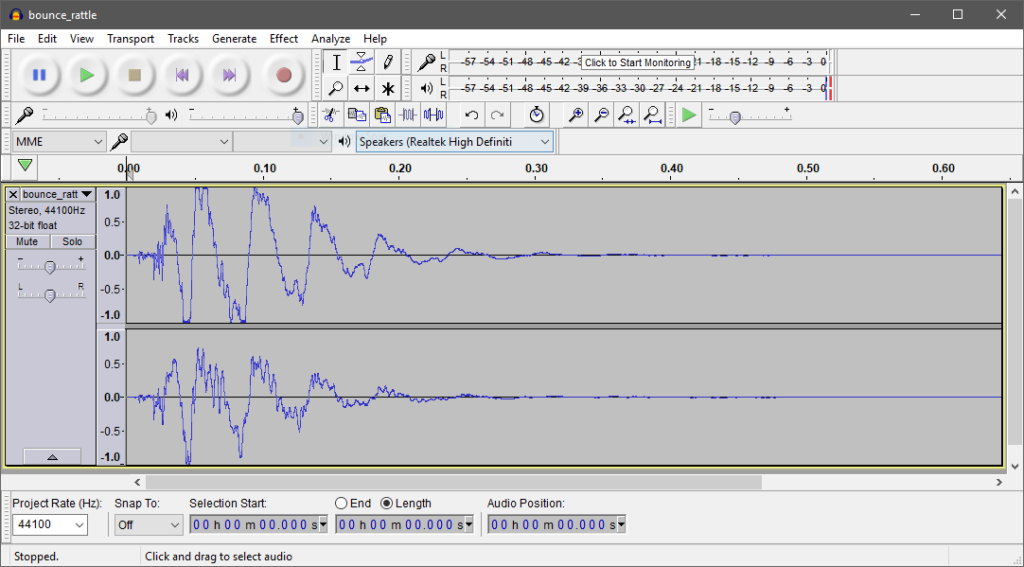

The audio is the most important part of this game. We used a variety of sound cues to help players stay oriented on the court and locate the ball at all times. We iterated on the sound design often, editing and replacing sound effects based on user feedback. “Can you make it sound more whoosh-y?” The sounds needed to be intuitive (the ball bounce sounds like a “bounce”), distinct (different sounds aren’t confused with each other), and congruent (sounds share a common audio style).

We started with freely downloaded sounds that we edited in Audacity, and eventually we commissioned the original work of Mollie Ziegler, a professional musician and friend of one of our teammates. She did our final sound effects as well as the in-game narration and some very catchy background music.

Visuals

I made most of the visual art for this game, which I thoroughly enjoyed doing. Even though our target audience is blind and low-vision players, simple population numbers mean that many of our players will be sighted, and the game’s visuals will inevitably be part of their experience.

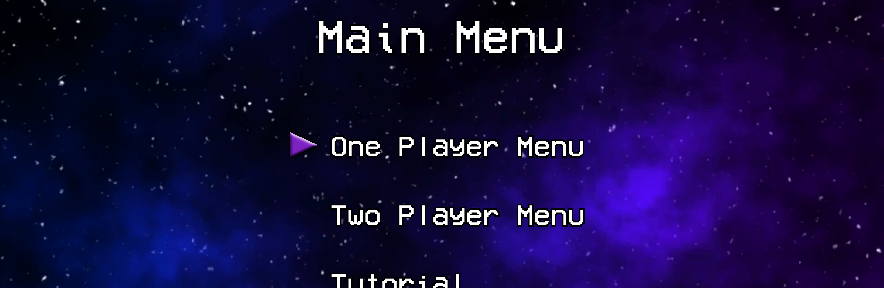

My goal for the visuals was to create a simple world that was entirely virtual (no real-world materials), in keeping with the arcade-style theme of the game’s Pong origins. My original color scheme was one that I hoped would encourage sighted players to close their eyes (and thus experience the game as it was meant to be played). I used deliberately dull, faded textures and created a background inspired by what we see when our eyes are closed.

This idea evolved, and the team decided it would be better to make the game a little more visually attractive. We also got ideas from a graphic artist, Kate, who volunteered to look at our game and give suggestions. I switched to bolder colors and created a new background while keeping the overall simplicity from before. We also used Kate’s title graphic. The new look suggests outer space, but it’s also clearly fantastical and virtual. It shows the player a world that’s totally unfamiliar but interesting, which is what sighted players can expect to experience in an audio-based game.

I also put together our app icon: an abstract representation of an invisible ball emitting sound as it travels down a plane. I did all of my visual artwork in Blender and Photoshop.

Final product: Ear Hockey

So, little by little, on weekends and evenings, we added all of the core features we had planned and fixed every bug we could find. We released the game through the Microsoft Garage, “the outlet for experimental projects from teams across the company.” The Garage provided a publishing pipeline that let us list our game on the Microsoft Store under Microsoft’s name without all the red tape of a first-party title like Halo. There was still plenty of red tape, but our sponsors in the accessibility org were very helpful in coordinating all the tests and checks that needed to be done.

Now we’re published. As part of our subsequent promotion of the game, Boris and I got the chance to do an interview on TipsAndTricks, a gaming news channel on Mixer.

This whole project has been a great opportunity to build my game development skills, learn about accessibility, and create something fun and unique. We’re not finished, though; there will be updates and new features to add. In the meantime, I hope you’ll give the game a try and let me know what you think. Be sure to use a good set of headphones for the full experience!